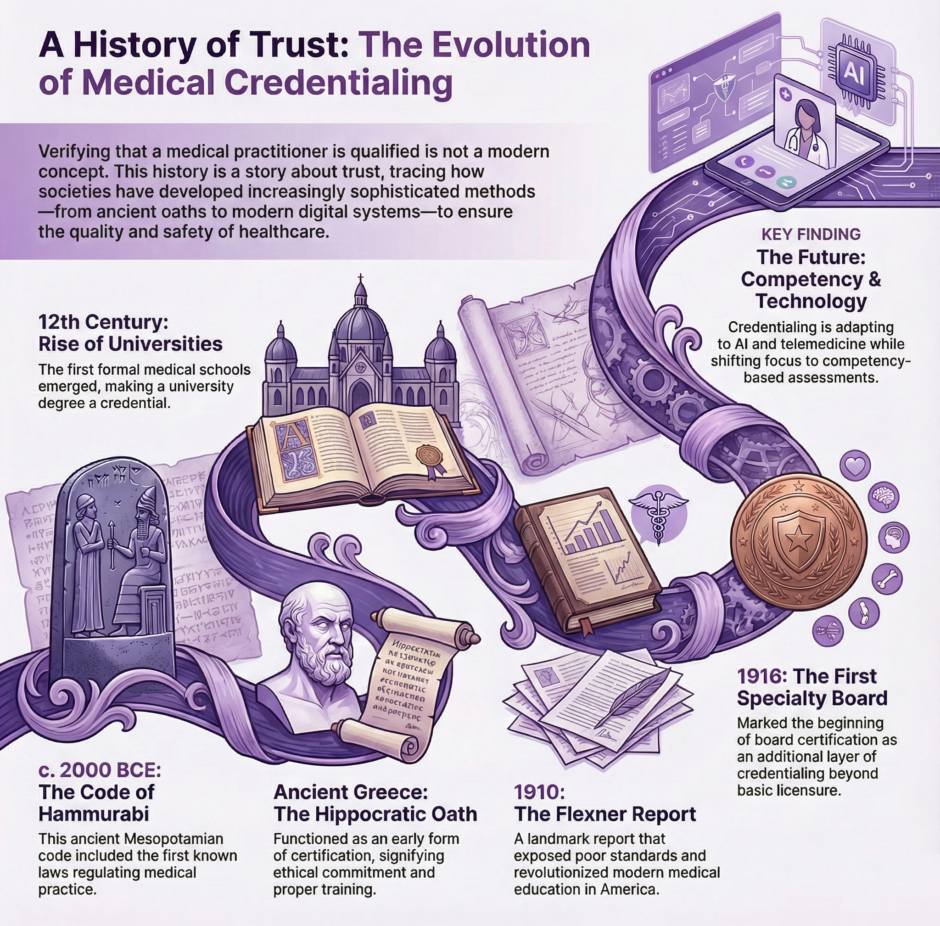

Let’s take a journey through the history of medical credentialing, a story that’s as old as medicine itself. You might think medical licenses are a modern invention, but people have been trying to figure out who’s qualified to practice medicine for thousands of years. It’s a tale that reveals a lot about how we’ve approached healthcare throughout history, and some of it might surprise you.

Ancient Beginnings: The First Medical Credentials

You know how today we have medical boards and licensing exams? Well, one of the earliest known systems for regulating healers dates back to ancient Mesopotamia, around 1750 BCE. The Code of Hammurabi included specific laws about medical practice, though they were a bit more dramatic than today’s regulations. If a physician’s treatment led to a patient’s death, they might lose their hands! Talk about high-stakes medical practice.

But it was really the ancient Greeks who started formalizing medical education in a way we’d recognize today. The Hippocratic Oath, which you’ve probably heard of, was actually one of the earliest forms of medical credentialing. When physicians took this oath, it served as a sort of ancient certification, telling the public that this person had been properly trained and would follow certain ethical principles.

The Greeks also established the first organized medical schools. The most famous was on the island of Cos, where Hippocrates taught. Students would study for years under experienced physicians, learning through a combination of theoretical knowledge and practical experience, not so different from today’s medical residencies, when you think about it.

Medieval Medicine: Guilds and Universities

The Middle Ages brought some interesting developments in medical credentialing. In medieval Europe, medicine became organized through guilds, just like other trades. These guilds were essentially the first professional medical organizations, setting standards for who could practice medicine and how they should be trained.

The really big change came with the rise of universities in the 12th and 13th centuries. The medical school at Salerno in Italy is generally regarded as the first formal medical school in Europe, and others quickly followed. To practice medicine, you needed a degree from one of these universities, though enforcement was, shall we say, a bit spotty. The interesting thing is that these medieval medical degrees were often more standardized than what you’d find in later centuries.

Here’s a fun fact: medieval medical students had to pass public examinations where anyone could ask them questions. Imagine having to defend your medical knowledge not just to professors, but to random people off the street! It was like a medical version of an AMA (Ask Me Anything) session.

The Renaissance: A Time of Change and Conflict

The Renaissance period saw a real shake-up in medical credentialing. With the invention of the printing press, medical knowledge became more widely available, and this led to some interesting tensions. You had university-trained physicians competing with all sorts of other healers: barber-surgeons, midwives, herbalists, and more.

This period also saw the rise of royal colleges of physicians, like the Royal College of Physicians in London, founded in 1518. These organizations were given the power to grant licenses and regulate medical practice. But there was often a big gap between what the law said and what actually happened on the ground. In many places, especially rural areas, people still relied heavily on unlicensed practitioners.

The Modern Era Takes Shape: 18th and 19th Centuries

The modern system of medical credentialing really started taking shape in the 18th and 19th centuries. This was when medicine began to become more scientific and standardized. The old system of apprenticeships and guild membership started giving way to formal medical education and state licensing.

In America, the story gets particularly interesting. In the early days of the United States, basically anyone could call themselves a doctor. There were no real standards or requirements. This led to what medical historians call the “heroic age” of medicine, where various competing schools of thought, some rather questionable by today’s standards, all claimed to have the answer to medical treatment.

The situation started to change in the 1830s when states began passing medical licensing laws. But here’s the catch: these laws were actually repealed in many states by the 1850s. Why? Because of a widespread belief that requiring licenses was anti-democratic and created unfair monopolies. It’s a debate that in some ways still echoes today in discussions about healthcare regulation.

The Revolution in Medical Education

The real turning point came in 1910 with the publication of the Flexner Report. This report, commissioned by the Carnegie Foundation, evaluated medical schools across the United States and Canada, and what it found was pretty shocking. Many medical schools were little more than diploma mills, with no labs, no clinical facilities, and sometimes not even any patients for students to learn from.

The Flexner Report led to massive reforms in medical education. Many substandard medical schools were closed, and those that remained had to meet much higher standards. This is when we started seeing the modern system of medical education take shape: a four-year medical degree following a bachelor’s degree, with standardized curricula and clinical training requirements.

The Rise of Specialization and Board Certification

The 20th century saw another major development in medical credentialing: the rise of medical specialties and board certification. The American Board of Ophthalmology, established in 1916, was the first specialty board. Today, there are dozens of specialty boards, each with its own certification requirements.

This development reflected the growing complexity of medicine. As medical knowledge expanded, it became impossible for any one doctor to master everything. Specialization was the natural response, but it created new challenges for credentialing. How do you verify that someone is qualified in a specific area of medicine?

The answer was board certification, which became an additional layer of credentialing on top of basic medical licensure. It’s worth noting that board certification is voluntary: you can practice medicine with just a license. Still, it has become increasingly important in many settings.

The Digital Revolution and Modern Challenges

Today, medical credentialing has entered the digital age. Electronic verification systems have made it easier to check credentials and track continuing education requirements. But they’ve also created new challenges. How do you verify online medical education? How do you credential telemedicine providers who might practice across state lines?

The COVID-19 pandemic brought some of these issues to the forefront. Many states temporarily modified their credentialing requirements to allow out-of-state physicians to help during the crisis. This has led to ongoing discussions about whether our current state-by-state licensing system makes sense in an increasingly connected world.

International Perspectives

It’s fascinating to look at how different countries handle medical credentialing. In some European countries, for instance, medical education is at the undergraduate level, so students enter medical school right after high school. The United Kingdom has a system of “provisional registration” for new doctors, followed by “full registration” after completing additional training.

In many developing countries, the challenge is different: how do you maintain high standards while also ensuring there are enough healthcare providers to serve the population? Some countries have developed innovative solutions, like Cuba’s system of medical education, which trains doctors from many other countries.

Current Trends and Future Directions

Several trends are shaping the future of medical credentialing. One is the move toward competency-based education and assessment, rather than just time-based requirements. The idea is that what matters is what you can do, not just how long you’ve spent training.

Another trend is the increasing focus on maintaining competency throughout a physician’s career. Continuing medical education requirements have been around for a while, but there’s growing interest in more rigorous ways to ensure doctors keep their skills up to date.

There’s also increasing attention to “soft skills” and cultural competency. Modern medical credentialing is starting to look at things like communication skills and cultural awareness, not just medical knowledge and technical skills.

The Future of Medical Credentialing

Several questions loom large for medical credentialing:

- How will artificial intelligence and other new technologies change medical practice, and how should credentialing adapt?

- Should we move toward a more national or even international system of medical licensing?

- How do we balance the need for high standards with the need for access to care?

Some interesting innovations are already emerging. There are experiments with “micro-credentials” for specific skills or procedures. Virtual reality is being used in both training and assessment. And there’s growing interest in ways to make credentialing more efficient without compromising quality.

Summary: Medical Credentialing History

The history of medical credentialing is really a story about trust: how society has tried to ensure that people providing medical care are qualified to do so. From the Code of Hammurabi to modern board certification, we’ve seen constant evolution in how we approach this challenge.

What’s particularly interesting is how many of the fundamental questions haven’t changed. How do we balance access to care with quality standards? How do we ensure practitioners stay up to date? How do we adapt credentialing systems to new medical knowledge and technologies?

The history of medical credentialing shows us that this is a challenge that each generation must address in its own way, adapting to new circumstances while building on the lessons of the past.